Our lives have been transformed by portable, connected gadgets – most obviously the smartphone, but also a multitude of others, such as smartwatches, fitness trackers, and hearables. These devices combine data collection with processing power and wireless connectivity.

But, amongst all their other features, it’s easy to overlook the importance of motion sensors in making our gadgets more functional and intuitive. Whether it’s changing screen orientation on our phones, counting steps on a smartwatch, matching our head’s movements with our XR glasses, or tapping earbuds to change the song, motion sensing is a vital part of the user experience and interface.

For embedded engineers, choosing and integrating motion sensors can be tricky. How can you ensure you pick the right technology to get the accuracy you need, without unnecessarily increasing cost or power consumption? And how can you get the most out of the sensors, so that you don’t miss out on performance or features that could improve your end product?

Motion sensor fundamentals

First, a quick recap of what we mean by motion sensors. Three kinds are commonly used: the accelerometer, the gyroscope, and the magnetometer.

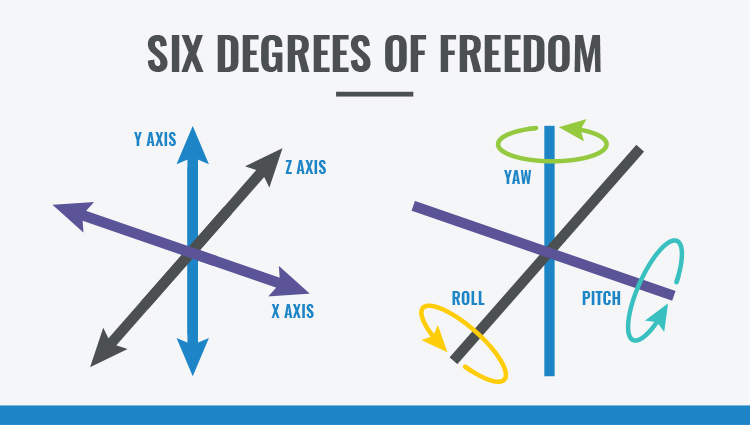

The accelerometer measures acceleration, which includes acceleration due to gravity. This means it can sense the direction of gravity relative to the sensor: basically, which way is up. The gyroscope measures angular velocity (the rate of rotation); integrating that rate over time gives the change in angular position.
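As a minimal sketch of these two basic measurements, the Python below estimates tilt (roll and pitch) from a single accelerometer reading and advances an angle by integrating one gyroscope sample. It assumes the device is otherwise at rest, so the accelerometer sees only gravity, and it uses an illustrative x-forward, y-left, z-up axis convention; real parts differ in axis orientation and sign, so check the datasheet.

```python
import math


def tilt_from_accel(ax: float, ay: float, az: float) -> tuple[float, float]:
    """Roll and pitch in degrees from one accelerometer reading (in g).

    Assumes the device is otherwise at rest, so the reading is gravity alone,
    and an illustrative x-forward, y-left, z-up frame in which a level device
    reads (0, 0, +1). Angles follow the right-hand rule about each axis.
    """
    roll = math.degrees(math.atan2(ay, az))
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    return roll, pitch


def integrate_gyro(angle_deg: float, rate_dps: float, dt_s: float) -> float:
    """Advance an angle by one gyroscope sample: angle += rate * dt."""
    return angle_deg + rate_dps * dt_s
```

For example, a reading of (0, 0.7071, 0.7071) g gives a roll of about 45°. The accelerometer can only recover tilt relative to gravity; rotation about the vertical axis is invisible to it, which is where the gyroscope and magnetometer come in.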

With three degrees of freedom, or axes, for each of the accelerometer and the gyroscope, combining the two gives us a 6-axis motion sensor, or inertial measurement unit (IMU) (see Figure 1). Many applications also need a magnetometer, which measures the strength and direction of the Earth’s magnetic field, so that we can estimate heading. Adding a 3-axis magnetometer to the accelerometer and gyroscope creates a 9-axis IMU.
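For a device held level, heading follows directly from the horizontal components of the magnetometer reading. This sketch assumes the same illustrative x-forward, y-left, z-up axes and a magnetometer that has already been calibrated for hard- and soft-iron effects. A device that tilts needs roll and pitch from the accelerometer to project the field onto the horizontal plane first (tilt compensation), which is omitted here. The optional declination term, east positive, converts magnetic heading to true heading.

```python
import math


def heading_level(mx: float, my: float, declination_deg: float = 0.0) -> float:
    """Heading in degrees clockwise from north, in [0, 360).

    Assumes a level device, an illustrative x-forward, y-left, z-up body
    frame, and calibrated magnetometer readings (any consistent unit).
    declination_deg (east positive) turns magnetic heading into true heading.
    """
    # Pointing east puts north to the left (+y), so atan2(my, mx) gives +90.
    magnetic = math.degrees(math.atan2(my, mx))
    return (magnetic + declination_deg) % 360.0
```

Only the direction of the horizontal field matters here, so the reading's units cancel out; the vertical component (mz) becomes important once tilt compensation is added.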

For the purposes of this post, we are going to discuss IMUs. Though some of the properties we discuss apply to an accelerometer, gyroscope, or magnetometer on its own, it is the challenges of combining at least two of these sensors that we’d like to illuminate.

Figure 1: Motion sensing with an IMU (Source: CEVA)

Keeping it accurate

So, now that we’ve reminded ourselves of the theory, how does it work in practice? How can we get the accuracy we need?

For an IMU, the accuracy with which it determines our device’s heading is a basic metric to start from. One application might require heading accuracy within a degree or two, while another can accept much less. For instance, the accuracy requirements of an XR headset are going to be much tighter than those of a children’s robotic toy. We should then consider the stability of this data: whether it will vary over time and with temperature.

Obtaining the most accurate output from our IMU requires sensor fusion: combining data from multiple sensors to produce a result that is greater than the sum of its parts. Each sensor in our IMU has different strengths and weaknesses, and fusion lets the strengths of one compensate for the weaknesses of another.

One way to think about sensor fusion is in terms of ‘trust’. Here, ‘trust’ means that we have a reasonable level of confidence in the accuracy and relevance of the data from a particular sensor. Say you’re the President of a country and have to determine policy. You have an economic advisor, a health advisor, and a military advisor. Each one gives you input, but each knows a different area. They can guess how their advice would affect their counterparts’ areas, but, alas, those are only guesses. It’s up to you to process and fuse their information into the best decision.

Similarly, you have two or three ‘advisors’ you can call on for orientation data from your IMU: the accelerometer, the gyroscope, and sometimes the magnetometer. The gyroscope is the easiest one to explain. Consumer-grade gyroscopes can be trusted for relative orientation changes over short periods of a few seconds, but their output drifts over longer intervals of tens of seconds or more. The accelerometer is helpful for measuring gravity over the long term, but it can be confused in certain scenarios, such as sustained acceleration in a car. We can trust the magnetometer in stable magnetic environments, like the countryside or the woods, but less so where there is magnetic interference, for example inside an office built with steel pillars.

In short, the gyroscope is accurate for short-term measurements, and the accelerometer and magnetometer for longer-term measurements. With a careful understanding of their limitations, we can fuse their data into a more accurate picture of a device’s orientation.
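A complementary filter is one of the simplest ways to put this ‘trust’ idea into practice. It is shown here as an illustration, not as the fusion algorithm that any particular product uses. The sketch fuses a single tilt angle: the gyroscope drives short-term changes, while a small weight of (1 - alpha) continually pulls the estimate toward the accelerometer’s gravity-based angle to cancel drift. The value alpha = 0.98 and the simulated bias are assumed values to tune for your own sensor and sample rate.

```python
class ComplementaryFilter:
    """Fuse gyroscope rate and accelerometer tilt into one angle (degrees).

    Each update trusts the integrated gyroscope by `alpha` and the
    accelerometer's gravity-based angle by (1 - alpha).
    """

    def __init__(self, alpha: float = 0.98, initial_deg: float = 0.0) -> None:
        if not 0.0 <= alpha <= 1.0:
            raise ValueError("alpha must be between 0 and 1")
        self.alpha = alpha
        self.angle = initial_deg

    def update(self, gyro_dps: float, accel_angle_deg: float, dt_s: float) -> float:
        # Short term: propagate the previous estimate with the gyroscope.
        predicted = self.angle + gyro_dps * dt_s
        # Long term: nudge toward the accelerometer to cancel gyro drift.
        self.angle = self.alpha * predicted + (1.0 - self.alpha) * accel_angle_deg
        return self.angle


if __name__ == "__main__":
    # Simulated device held at 10 deg with a gyro bias of 0.5 deg/s (assumed values).
    f = ComplementaryFilter(alpha=0.98)
    for _ in range(6000):  # 60 s at 100 Hz
        estimate = f.update(gyro_dps=0.5, accel_angle_deg=10.0, dt_s=0.01)
    print(f"fused estimate after 60 s: {estimate:.3f} deg")
```

With a constant 0.5 °/s gyro bias, pure integration would drift by 30° over the 60 simulated seconds, whereas the fused estimate settles about 0.25° above the true 10°. Raising alpha trusts the gyroscope more. That smooths out accelerometer noise, but it leaves a larger residual error from the bias.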

When we have multiple sensor outputs, as with a 9-axis IMU, sensor fusion gives us an opportunity to combine and compare data to improve accuracy. For example, if our sensor fusion software includes an algorithm that detects unexpected or sudden changes in the magnetometer’s output due to magnetic interference, it can automatically place more confidence in the accelerometer and gyroscope data until the magnetometer is stable again.
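One simple way to detect magnetic interference is to compare the magnitude of the measured field with a reference captured in a clean environment. This is an assumed heuristic, not any vendor’s algorithm. The Earth’s field strength is roughly 25 to 65 µT depending on location, so a reading outside that range, or far from the local reference, suggests a disturbance. The returned weight could then scale the magnetometer’s contribution in whatever fusion filter you use. The 15% tolerance is a hypothetical value.

```python
import math

# Approximate range of the Earth's field strength at the surface, in microtesla.
EARTH_FIELD_MIN_UT = 25.0
EARTH_FIELD_MAX_UT = 65.0


def magnetometer_trust(mx: float, my: float, mz: float,
                       reference_ut: float, tolerance: float = 0.15) -> float:
    """Weight in [0, 1] for the magnetometer (hypothetical heuristic).

    reference_ut is the field magnitude recorded in a clean environment;
    tolerance is the assumed fractional deviation at which trust reaches zero.
    """
    magnitude = math.sqrt(mx * mx + my * my + mz * mz)
    if not EARTH_FIELD_MIN_UT <= magnitude <= EARTH_FIELD_MAX_UT:
        return 0.0  # implausible for the Earth's field: likely interference
    deviation = abs(magnitude - reference_ut) / reference_ut
    if deviation >= tolerance:
        return 0.0
    return 1.0 - deviation / tolerance  # fade trust as the magnitude drifts
```

A fuller check might also watch the field’s dip angle relative to gravity, since interference can change direction without changing magnitude much.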

Sample rate is also important for accuracy: does your chosen sensor provide data often enough to meet your needs? This is application-dependent, of course. A few readings per second may be plenty for a basic step counter, and 100 Hz to 400 Hz is recommended for most applications, but sample rates of 1 kHz or higher may be needed for precise head tracking in XR applications (XR is a catch-all term for virtual, mixed, and augmented reality). A fast sample rate is also important for achieving low latency, which in our head-tracking example is the difference between an immersive VR experience and feeling motion sick!
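To illustrate why sample rate matters, this sketch integrates a simulated gyroscope signal and reports the worst-case angle error against the exact answer. The signal is a hypothetical 5 Hz oscillating rotation peaking at 200 °/s, and it is integrated with simple rectangular integration. The sketch also computes the sample period, which bounds how stale the newest reading can be. The motion profile and integration method are illustrative assumptions; real fusion code typically uses better integrators.

```python
import math


def sample_period_ms(sample_rate_hz: float) -> float:
    """Time between samples; the newest reading can be up to this old."""
    return 1000.0 / sample_rate_hz


def integration_error_deg(sample_rate_hz: float, duration_s: float = 1.0,
                          amp_dps: float = 200.0, freq_hz: float = 5.0) -> float:
    """Worst-case angle error from rectangular integration of a simulated gyro.

    Hypothetical motion: rate(t) = amp * cos(2*pi*f*t) in deg/s, whose exact
    integral is amp / (2*pi*f) * sin(2*pi*f*t) degrees.
    """
    dt = 1.0 / sample_rate_hz
    w = 2.0 * math.pi * freq_hz
    angle = 0.0
    worst = 0.0
    for n in range(round(duration_s * sample_rate_hz)):
        t = n * dt
        angle += amp_dps * math.cos(w * t) * dt       # one rectangular step
        exact = amp_dps / w * math.sin(w * (t + dt))  # true angle at end of step
        worst = max(worst, abs(angle - exact))
    return worst


if __name__ == "__main__":
    for rate in (100, 1000):
        print(f"{rate:>5} Hz: period {sample_period_ms(rate):.1f} ms, "
              f"max error {integration_error_deg(rate):.3f} deg")
```

Going from 100 Hz to 1000 Hz cuts the sample period from 10 ms to 1 ms. The integration error shrinks roughly in proportion, because rectangular integration’s error scales with the step size.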

We also need to consider calibration. Many sensors are supplied ‘as is’, with little more than the datasheet to go on. Any calibration that can be done will help to make performance more consistent from one individual sensor to the next. Factory calibration can be an effective way to improve individual performance, but it is relatively expensive. Dynamic calibration in the field is another option, but it requires a detailed understanding of the sensors themselves, or at least of your application. All sensors exhibit some bias (a non-zero output when the true input is zero). If that bias goes unaccounted for, it can degrade the overall output and compound any existing error.
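A common form of dynamic calibration is estimating gyroscope bias whenever the device is held still. The average reading at rest is the bias, and subtracting it removes an offset that would otherwise integrate into drift. In this minimal sketch, the stillness test is a hypothetical choice: a per-axis standard deviation below an assumed 0.05 °/s. Production code would also track how bias changes with temperature.

```python
from statistics import fmean, pstdev

Vector = tuple[float, float, float]


def is_stationary(samples_dps: list[Vector], max_std_dps: float = 0.05) -> bool:
    """True if every axis varies less than an assumed noise threshold."""
    return all(pstdev(axis) < max_std_dps for axis in zip(*samples_dps))


def estimate_gyro_bias(samples_dps: list[Vector]) -> Vector:
    """Per-axis mean of readings taken while the device is still."""
    x, y, z = (fmean(axis) for axis in zip(*samples_dps))
    return (x, y, z)


def remove_bias(sample_dps: Vector, bias_dps: Vector) -> Vector:
    """Subtract the estimated bias from one gyroscope reading."""
    x, y, z = (s - b for s, b in zip(sample_dps, bias_dps))
    return (x, y, z)
```

In use, you would collect a short window of samples and call `is_stationary`. Only if the window passes would you update the stored bias, so that genuine slow rotation is not mistaken for bias.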

Finally, we need to think about how to verify the sensor data we’ve obtained and fused together. While this depends on what we’re tracking, the basic principle is to use another, independent source of information as a ground truth to check our output against. For example, a robot arm could be repeatedly moved very precisely to a known point, and we can then check whether our sensors report the same position each time. When we detect an error, it may be possible to compensate for it with a calculation, or it may need to be flagged for further attention.
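Here is a minimal sketch of that check. It assumes the reference and measured values are headings in degrees. Each difference is wrapped into the range -180° to 180°, so that 359° versus 1° counts as a 2° error rather than 358°. The sketch then reports the RMS and worst-case error, and flags individual trials that exceed an assumed tolerance.

```python
import math


def angle_error_deg(measured: float, reference: float) -> float:
    """Signed difference between two headings, wrapped into [-180, 180)."""
    return (measured - reference + 180.0) % 360.0 - 180.0


def verify(measured: list[float], reference: list[float],
           tolerance_deg: float = 1.0) -> dict:
    """Compare sensor output with ground truth and flag out-of-tolerance trials."""
    if not measured or len(measured) != len(reference):
        raise ValueError("need equal-length, non-empty measured and reference lists")
    errors = [angle_error_deg(m, r) for m, r in zip(measured, reference)]
    rms = math.sqrt(sum(e * e for e in errors) / len(errors))
    flagged = [i for i, e in enumerate(errors) if abs(e) > tolerance_deg]
    return {"rms_deg": rms,
            "max_abs_deg": max(abs(e) for e in errors),
            "flagged": flagged}
```

A consistent signed error across trials points to an offset that can be calculated out. Scattered large errors are better flagged for investigation.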

Enabling new features

Once we can measure motion with high accuracy, it doesn’t just help existing applications; it can open up new capabilities in portable devices. For example, conventional user interfaces are problematic for hearables (Figure 2): users don’t want to have to pull out their phone to control them, and the hearables themselves are too small for convenient buttons. Instead, a tap on a hearable can skip a song, and detecting the movement of taking them out of your ears could mute all audio, since that is likely when you’d want the sound to stop anyway. Combining motion data with ‘classifiers’, algorithms that identify specific movements, leads to a more convenient and intuitive user experience.

Figure 2: Hearables (Source: CEVA)
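As a rough illustration of a tap classifier, the sketch below flags short, sharp spikes in the magnitude of acceleration and ignores longer disturbances such as simply moving your head. The spike is measured as the magnitude’s deviation from the 1 g of gravity at rest. Three values are assumed: the threshold, the 50 ms maximum spike length, and the 200 ms refractory period that suppresses ringing. A real product would tune them against recorded data, or use a trained classifier instead.

```python
import math


def _deviation_g(sample: tuple[float, float, float]) -> float:
    """How far the acceleration magnitude is from 1 g (gravity at rest)."""
    ax, ay, az = sample
    return abs(math.sqrt(ax * ax + ay * ay + az * az) - 1.0)


def detect_taps(samples_g: list[tuple[float, float, float]], rate_hz: float,
                threshold_g: float = 1.5, max_duration_s: float = 0.05,
                refractory_s: float = 0.2) -> list[int]:
    """Return the sample index at which each tap-like spike starts.

    All thresholds are assumed values for illustration.
    """
    max_len = max(1, int(max_duration_s * rate_hz))
    refractory = int(refractory_s * rate_hz)
    taps: list[int] = []
    i = 0
    while i < len(samples_g):
        if _deviation_g(samples_g[i]) <= threshold_g:
            i += 1
            continue
        start = i
        while i < len(samples_g) and _deviation_g(samples_g[i]) > threshold_g:
            i += 1
        if i - start <= max_len:              # short spike: looks like a tap
            taps.append(start)
            i = max(i, start + refractory)    # skip ringing after the tap
    return taps
```

A double tap could then be recognized as two detected taps within a short window, which is another parameter you would tune.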

Accurate information from motion sensors can also give your portable device a better idea of what’s going on around you, or what activity you’re engaged in. This is known as context awareness, and it can be used to deliver a more helpful experience. For example, a hearable that detects you’ve walked up to a pedestrian road crossing could lower the volume of your music so that you can hear the siren of a nearby ambulance.

For fitness tracking applications, increased precision makes it possible to distinguish between user activities. For instance, if your step tracker can measure the size, speed, or other characteristics of your movements, it can work out whether you’re just walking normally, or perhaps climbing or descending stairs. Combined with other sensor data, such as air pressure and GPS-derived location, software can build a detailed picture of your movements and estimate the calories burned.
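The sketch below shows one way these pieces could combine, under clearly simplified assumptions. Steps are counted as peaks in acceleration magnitude above an assumed 1.2 g, at least 0.3 s apart. Pressure is converted to altitude with the common international barometric formula. The average height gained per step then separates walking from stairs, using an assumed 0.1 m threshold (a typical stair riser is somewhat higher). It is illustrative only, and calorie estimation is not attempted.

```python
def pressure_to_altitude_m(pressure_hpa: float, sea_level_hpa: float = 1013.25) -> float:
    """International barometric formula: altitude relative to sea_level_hpa."""
    return 44330.0 * (1.0 - (pressure_hpa / sea_level_hpa) ** (1.0 / 5.255))


def count_steps(magnitudes_g: list[float], rate_hz: float,
                threshold_g: float = 1.2, min_interval_s: float = 0.3) -> int:
    """Count local peaks above an assumed threshold, spaced by min_interval_s."""
    min_gap = int(min_interval_s * rate_hz)
    peaks: list[int] = []
    for i in range(1, len(magnitudes_g) - 1):
        m = magnitudes_g[i]
        is_peak = m >= magnitudes_g[i - 1] and m > magnitudes_g[i + 1]
        if m > threshold_g and is_peak and (not peaks or i - peaks[-1] >= min_gap):
            peaks.append(i)
    return len(peaks)


def classify_activity(step_count: int, altitude_change_m: float,
                      rise_per_step_m: float = 0.1) -> str:
    """Label a window of movement using height change per step (assumed cutoff)."""
    if step_count == 0:
        return "still"
    per_step = altitude_change_m / step_count
    if per_step > rise_per_step_m:
        return "climbing stairs"
    if per_step < -rise_per_step_m:
        return "descending stairs"
    return "walking"
```

Near sea level, a drop of 1 hPa corresponds to roughly 8 m of climb, so the pressure sensor needs fine resolution and some filtering to resolve individual flights of stairs.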

Putting it together: sensor fusion and software

As we’ve discussed, to maximize the accuracy and usefulness of motion data, it’s not enough to simply produce a raw data feed. Devices need to process the data, compensate for systematic inaccuracies, and combine information from multiple sensors.

There’s a lot to get to grips with here. Sensor fusion is complicated, and achieving the best results in a small package takes know-how. However, various products are available that integrate the required sensors and processing. For example, the BNO080/085, developed through a partnership between Bosch and CEVA Hillcrest Labs, includes a high-performance accelerometer, magnetometer, and gyroscope, as well as a low-power 32-bit ARM Cortex-M0+ MCU.

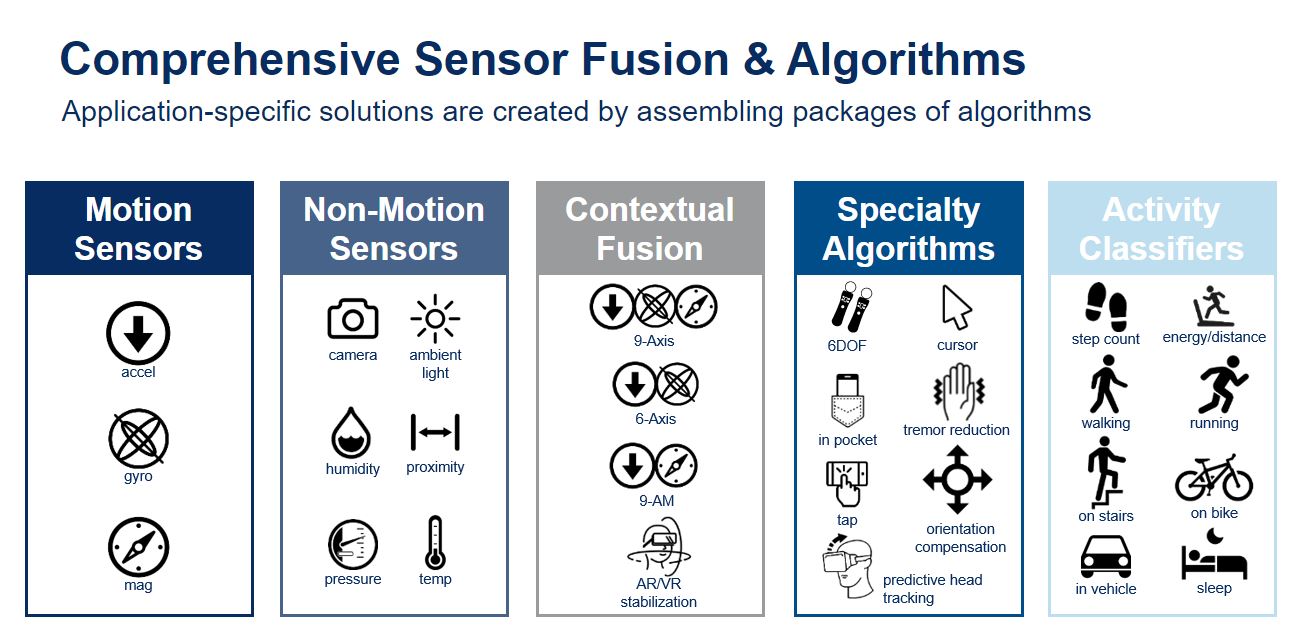

Hillcrest Labs’ MotionEngine™ sensor hub software, which is pre-programmed on the BNO085, provides 6-axis and 9-axis motion tracking, as well as intelligent features such as classifying user activity like walking, running and standing (Figure 3). The MotionEngine sensor hub is compatible with the leading embedded processing architectures and operating systems, with specialized versions available for hearables, smart TV, robotics, mobile computing, remote controls, low-power mobile applications, and more.

Figure 3: Comprehensive Sensor Fusion & Algorithms (Source: CEVA)
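Fused orientation from a sensor hub like this is commonly reported as a unit quaternion (a ‘rotation vector’) rather than as angles. The sketch below converts one into roll, pitch, and yaw using the aerospace ZYX sequence. It assumes raw 16-bit components use a fixed-point scale of 2^14 (Q14); confirm the Q point for each report in the vendor’s SH-2 reference documentation before relying on it. Euler angles become ill-defined near ±90° pitch (gimbal lock), which is one reason fusion software works with quaternions internally.

```python
import math

Q14_SCALE = 1 << 14  # assumed fixed-point scale; verify against the vendor docs


def q14_to_float(raw: int) -> float:
    """Convert a signed fixed-point component to a float (assumed Q14)."""
    return raw / Q14_SCALE


def quaternion_to_euler_deg(w: float, x: float, y: float,
                            z: float) -> tuple[float, float, float]:
    """Unit quaternion to (roll, pitch, yaw) in degrees, ZYX sequence."""
    roll = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))
    # Clamp to guard against rounding pushing the argument slightly past +/-1.
    sin_pitch = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    pitch = math.asin(sin_pitch)
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    return math.degrees(roll), math.degrees(pitch), math.degrees(yaw)
```

For example, the quaternion (w, x, y, z) = (0.7071, 0, 0, 0.7071) represents a 90° rotation about the vertical axis and converts to a yaw of about 90°.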

Pulling all this data together in real time can be challenging and requires a non-trivial amount of processing performance. It may well be best to choose integrated sensors that run some of these algorithms on an MCU core in the sensor device itself, rather than requiring the main application processor to take on these chores. In particular, for ‘always on’ tasks like step counting, if we can avoid waking the main processor every time a movement is detected, it can stay in sleep mode, reducing overall power consumption and increasing battery life. To return to our earlier analogy, a system-in-package (SiP) dedicated to sensor fusion is like a Vice President who handles certain decisions so that the President, the main processor, can focus on more immediate tasks.
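The power argument is easy to check with back-of-the-envelope arithmetic. Each load’s average current is its active current weighted by its duty cycle, plus its sleep current for the rest of the time. Battery life is then capacity divided by the total average current. Every number below is made up for illustration and is not a figure for the BNO085 or any other part; substitute values from your own datasheets and measurements.

```python
from dataclasses import dataclass


@dataclass
class Load:
    name: str
    active_ma: float
    sleep_ma: float
    duty: float  # fraction of time spent active, 0..1

    def average_ma(self) -> float:
        return self.active_ma * self.duty + self.sleep_ma * (1.0 - self.duty)


def battery_life_hours(capacity_mah: float, loads: list[Load]) -> float:
    return capacity_mah / sum(load.average_ma() for load in loads)


# Hypothetical figures: the host wakes for every movement vs. a sensor hub filtering events.
host_wakes = [Load("app processor", 10.0, 0.05, 0.20), Load("IMU", 0.5, 0.5, 1.0)]
hub_handles = [Load("app processor", 10.0, 0.05, 0.01),
               Load("IMU + sensor-hub MCU", 1.0, 1.0, 1.0)]

if __name__ == "__main__":
    a = battery_life_hours(300.0, host_wakes)
    b = battery_life_hours(300.0, hub_handles)
    print(f"{a:.0f} h vs {b:.0f} h")  # prints: 118 h vs 261 h
```

With these hypothetical figures, moving step counting onto the sensor hub roughly doubles battery life, even though the sensor package itself draws twice the current. The gain comes from the far hungrier application processor spending much less time awake.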

Conclusions

Motion sensors play an important role in many applications, but it’s not always obvious how to choose the right device, and how to achieve the degree of accuracy needed for your application – or even how accurate you need to be. Different use cases require different levels of precision, and have varying requirements for the kind of data needed.

Integrated sensors and sensor fusion can often solve this problem. By working with a suitable vendor, you can obtain accurate, reliable data without compromise, as well as value-added features for specific applications, while keeping cost and power consumption to a minimum.

Published on Embedded.com.