Scalable High-Performance NPU IP for Generative & Edge AI

Ceva-NeuPro-M: A unified hardware and software NPU solution offering scalable performance for advanced AI models across edge and cloud systems



Ceva-NeuPro-M is a scalable NPU architecture, ideal for transformers, Vision Transformers (ViT), and generative AI applications, with exceptional power efficiency of up to 3500 tokens per second per watt for Llama 2 and Llama 3.2 models.
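Because a watt is one joule per second, a tokens-per-second-per-watt figure is simply tokens per joule, so its reciprocal is the energy spent per generated token. The short Python sketch below works this out for the 3500 figure quoted above. The 500-token response length is a hypothetical example, and since the quoted figure is an "up to" value whose measurement conditions are not stated, the result is a best-case estimate, not a measurement.

```python
# Tokens/s per watt is dimensionally tokens per joule:
# (tokens / s) / (J / s) = tokens / J.


def energy_per_token_uj(tokens_per_sec_per_watt: float) -> float:
    """Energy per generated token, in microjoules."""
    joules_per_token = 1.0 / tokens_per_sec_per_watt
    return joules_per_token * 1e6


def energy_for_response_j(num_tokens: int, tokens_per_sec_per_watt: float) -> float:
    """Energy, in joules, to generate num_tokens at the given efficiency."""
    return num_tokens / tokens_per_sec_per_watt


peak_efficiency = 3500.0  # the quoted "up to" figure for Llama 2 / Llama 3.2
print(f"{energy_per_token_uj(peak_efficiency):.1f} uJ per token")
# Hypothetical 500-token reply, for illustration only.
print(f"{energy_for_response_j(500, peak_efficiency):.3f} J for a 500-token reply")
```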

The Ceva-NeuPro-M Neural Processing Unit (NPU) IP family delivers exceptional energy efficiency for edge computing and cloud inference, with scalable performance for AI models of over a billion parameters. Its award-winning architecture introduces significant advances in power efficiency and area optimization, enabling it to support massive machine-learning networks, advanced language and vision models, and multi-modal generative AI. With a processing range of 2 to 256 TOPS per core and leading area efficiency, Ceva-NeuPro-M is optimized for key AI models. A robust tool suite complements the NPU by streamlining hardware implementation, model optimization, and runtime module composition.

Key Features: Performance, Optimization, and Future-Proof AI Design

Performance and Efficiency

- Scalable Architecture: Independent, fully parallel computing design scalable from 2 TOPS to thousands of TOPS in multi-core configurations, achieving up to 5500 tokens/second.

- Power Efficiency: Exceptional intrinsic hardware efficiency delivering 1500 tokens/second per Watt for optimal energy consumption.

- Dynamic Precision Control:

  - Hardware support for variable weight precision ranging from 16-bit floating point to 4-bit integer, reducing memory requirements, bandwidth usage, and computational energy consumption.

  - Supports mixed precision configurations, including 4×4, 4×8, 4×16, 8×8, and 8×16, enabling optimized performance and flexibility for diverse computational needs.
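To see why lower weight precision matters, consider the storage needed for a model's weights: parameter count times bits per weight. The Python sketch below assumes a hypothetical 1-billion-parameter model (the scale mentioned in the overview) and ignores the small overhead of quantization scales. At a fixed token rate, the bytes of weights streamed from memory shrink by roughly the same ratio.

```python
# Bits per weight for the precisions named above (16-bit float down to 4-bit integer).
BITS_PER_WEIGHT = {"fp16": 16, "int8": 8, "int4": 4}


def weight_footprint_gb(num_params: int, bits: int) -> float:
    """Weight storage in gigabytes (1 GB = 1e9 bytes), ignoring per-group scales."""
    return num_params * bits / 8 / 1e9


params = 1_000_000_000  # hypothetical 1B-parameter model
for name, bits in BITS_PER_WEIGHT.items():
    print(f"{name}: {weight_footprint_gb(params, bits):.2f} GB")
```

Going from 16-bit floating point to 4-bit integer weights cuts the footprint by a factor of four, from 2 GB to 0.5 GB in this example.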

Advanced Optimization Techniques

- Quantization Excellence: Advanced quantization techniques like Group Quantization, SpinQuant, and FlatQuant optimize model performance for GenAI, LLMs, and multi-modal AI, ensuring high accuracy while reducing memory and computational demands.

- Sparsity and Activation Engines: Eliminate unnecessary memory accesses and compute cycles, boosting overall efficiency.

- Compression: Optional integrated compression and decompression for weights to minimize memory size and bandwidth usage.
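Ceva does not describe how its quantization is implemented. As a generic illustration of the idea behind Group Quantization, the Python sketch below applies symmetric 4-bit quantization with one floating-point scale per group of weights, so an outlier coarsens only its own group rather than the whole tensor. The function names, group size, and example values are assumptions. SpinQuant and FlatQuant, which transform weights and activations before quantizing them, are not shown.

```python
def quantize_groups(weights, group_size=4, bits=4):
    """Symmetric per-group quantization: one float scale per group of weights."""
    qmax = 2 ** (bits - 1) - 1  # largest positive code, e.g. 7 for 4-bit
    codes, scales = [], []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        max_abs = max(abs(w) for w in group)
        scale = max_abs / qmax if max_abs > 0 else 1.0
        scales.append(scale)
        # Round to the nearest integer code and clip to the symmetric range.
        codes.extend(max(-qmax, min(qmax, round(w / scale))) for w in group)
    return codes, scales


def dequantize_groups(codes, scales, group_size=4):
    """Map integer codes back to approximate float weights."""
    return [c * scales[i // group_size] for i, c in enumerate(codes)]


def mean_abs_error(weights, group_size):
    """Average reconstruction error after a quantize/dequantize round trip."""
    codes, scales = quantize_groups(weights, group_size)
    restored = dequantize_groups(codes, scales, group_size)
    return sum(abs(w - r) for w, r in zip(weights, restored)) / len(weights)


# Hypothetical weights: small values in the first group, an outlier (4.0) in the second.
weights = [0.1, -0.2, 0.05, 0.3, 4.0, -2.0, 1.0, 0.5]
print("per-group error: ", mean_abs_error(weights, group_size=4))
print("per-tensor error:", mean_abs_error(weights, group_size=len(weights)))
```

With a single scale for the whole tensor, the outlier forces a coarse step size that rounds most of the small weights to zero; per-group scales keep those weights accurate at the cost of storing one extra scale per group.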

Future-Proof Design

- Programmable Vector Processor: Provides adaptability for emerging AI algorithms, ensuring long-term hardware relevance in a rapidly evolving AI landscape.

- System Integration and Integrity: Seamless integration through robust interfaces, modular architecture, and adherence to industry standards; prioritizes data integrity with error-checking mechanisms for consistent performance and compatibility.

- Functional Safety Compliance: ISO 26262 ASIL-B

- Integrated AXI Matrix: Optimized for the Ceva-NeuPro-M parallel architecture

- Full AI Software Stack: Includes Ceva-NeuPro Studio for composing runtime modules

- Compatibility with Open-Source AI Frameworks: Including TVM and ONNX
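The text does not say how the Ceva-NeuPro Studio toolchain decides which hardware block executes each layer. The toy Python sketch below only illustrates why a programmable vector unit helps future-proof a design: operations that a fixed-function MAC engine supports go there, and anything else, including operation types invented after the chip is built, still has a programmable target. The op-type set, unit names, and graph are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical: op types assumed to run on the fixed-function MAC engine.
MAC_ENGINE_OPS = {"conv2d", "matmul", "fully_connected"}


@dataclass
class Op:
    name: str
    op_type: str


def assign_units(ops):
    """Map each op name to a target unit; unknown op types fall back to the vector unit."""
    return {
        op.name: "mac_engine" if op.op_type in MAC_ENGINE_OPS else "vector_unit"
        for op in ops
    }


graph = [
    Op("qkv_proj", "matmul"),
    Op("rope", "rotary_embedding"),  # not in the assumed MAC-engine set
    Op("attn_scores", "matmul"),
    Op("new_layer", "future_custom_op"),  # stands in for a not-yet-invented operation
]
print(assign_units(graph))
```

A real compiler would also weigh data movement and the dedicated activation units, which this sketch ignores.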

The Solution: Scalable Hardware & Software for High-Performance AI

The Ceva-NeuPro-M NPU IP family is a highly scalable, complete hardware and software IP solution for embedding high-performance AI processing in SoCs across a wide range of edge and cloud AI applications.

Computational Unit Architecture

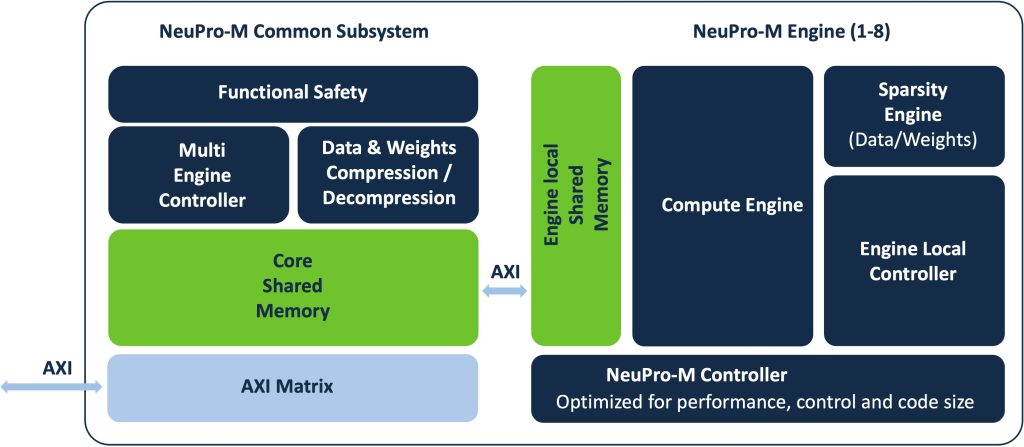

The heart of the NeuPro-M NPU architecture is the computational unit, scalable from 2 to 32 TOPs.

A single computational unit includes:

- Multiple-MAC parallel neural computing engine

- Activation and sparsity control units

- Independent programmable vector-processing unit

- Local shared L1 memory

- Local unit controller
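The TOPS rating of a MAC-array engine follows from a standard back-of-the-envelope formula: each multiply-accumulate counts as two operations, so peak operations per second equal 2 × (number of MACs) × (clock frequency). The MAC counts and 1 GHz clock below are hypothetical values chosen to land near the 2 and 32 TOPS endpoints quoted for the computational unit. Ceva does not give per-unit MAC counts or clock rates here, nor the precision at which the TOPS figures are rated.

```python
def peak_tops(num_macs: int, clock_ghz: float) -> float:
    """Peak tera-operations per second, counting each MAC as 2 ops (multiply + add)."""
    ops_per_second = 2 * num_macs * clock_ghz * 1e9
    return ops_per_second / 1e12


# Hypothetical configurations; not published Ceva figures.
print(peak_tops(1024, 1.0))   # 2.048
print(peak_tops(16384, 1.0))  # 32.768
```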

Core & Multi-Core Clusters

A core may contain up to eight computational units, along with a shared Common Subsystem comprising:

- Functional safety

- Data compression

- Shared L2 memory

- System interfaces
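The compression scheme used by the Common Subsystem, like the optional weight compression listed among the optimization techniques, is not specified. As a generic stand-in, the sketch below uses Python's standard `zlib` module to show the underlying effect: a pruned weight tensor with many zeros compresses considerably better than dense, high-entropy weights, which means less memory to store and less bandwidth to fetch. The tensors are synthetic and hypothetical.

```python
import random
import zlib


def compression_ratio(data: bytes, level: int = 9) -> float:
    """Original size divided by zlib-compressed size (higher is better)."""
    return len(data) / len(zlib.compress(data, level))


rng = random.Random(0)  # fixed seed so the synthetic tensors are reproducible
# Hypothetical dense int8 weights, modeled as uniformly random bytes.
dense = bytes(rng.randrange(256) for _ in range(4096))
# Hypothetical pruned version: keep every 8th weight, zero the rest (87.5% sparsity).
pruned = bytes(b if i % 8 == 0 else 0 for i, b in enumerate(dense))

print(f"dense:  {compression_ratio(dense):.2f}x")
print(f"pruned: {compression_ratio(pruned):.2f}x")
```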

NPU cores can be grouped into multi-core clusters to reach performance levels of thousands of TOPS.
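Combining the figures above gives the peak compute ladder: a computational unit tops out at 32 TOPS and a core holds up to eight units, so a fully populated core peaks at 8 × 32 = 256 TOPS, matching the per-core range given in the overview. The sketch below also estimates how many such cores a cluster would need for a target rating, assuming ideal linear scaling; real clusters typically lose some throughput to memory bandwidth and interconnect, which this arithmetic ignores. The 2000 TOPS target is a hypothetical example.

```python
import math

MAX_UNITS_PER_CORE = 8  # from the text: up to eight computational units per core
MAX_TOPS_PER_UNIT = 32  # from the text: 2 to 32 TOPS per unit


def max_core_tops() -> int:
    """Peak TOPS of a fully populated core."""
    return MAX_UNITS_PER_CORE * MAX_TOPS_PER_UNIT


def cores_for_target(target_tops: float) -> int:
    """Minimum number of max-configuration cores, assuming ideal linear scaling."""
    return math.ceil(target_tops / max_core_tops())


print(max_core_tops())         # 256
print(cores_for_target(2000))  # 8
```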

Benefits: Scalable, Efficient, and Ready for Edge AI Workloads

Scalable AI Performance

- Empowers edge AI and cloud inference system developers with computing power and energy efficiency for complex AI workloads.

- Enables seamless deployment of multi-modal models and transformers in edge AI SoCs.

- Delivers scalable performance for real-time, on-device processing and cloud inference workloads.

Flexibility Across Applications

- Scales from smaller systems such as security cameras, robotics, or IoT devices (starting at 2 TOPS) to thousands of TOPS in multi-core systems.

- Supports LLMs and multi-modal generative AI using the same hardware units, core structure, and AI software stack.

Built-In Optimization Support

- Provides direct hardware support for critical model optimizations:

  - Variable precision for weights

  - Hardware-supported addressing of sparse arrays

- Each computational unit includes an independent vector processor for novel computations that may be required in future AI algorithms.

- Supports functional safety compliance: ISO 26262 ASIL-B.
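Ceva does not document the sparse format that NeuPro-M addresses in hardware. One common scheme, sketched below in Python as an assumption, stores a bitmask marking which weights are non-zero plus a packed list of the non-zero values. The compute loop walks the mask and issues a multiply-accumulate only where a weight exists, which is how sparsity saves both memory and compute cycles. Function names and data are hypothetical.

```python
def compress_sparse(weights):
    """Pack non-zero weights and keep a bitmask of their positions."""
    mask = [w != 0 for w in weights]
    values = [w for w in weights if w != 0]
    return mask, values


def sparse_dot(mask, values, activations):
    """Dot product that performs MACs only for non-zero weights; returns (result, macs)."""
    acc, macs, v = 0, 0, 0
    for bit, x in zip(mask, activations):
        if bit:
            acc += values[v] * x  # next packed non-zero weight
            v += 1
            macs += 1
    return acc, macs


weights = [0, 3, 0, 0, -2, 0, 1, 0]
activations = [5, 1, 7, 2, 4, 9, 6, 3]
mask, values = compress_sparse(weights)
result, macs = sparse_dot(mask, values, activations)
print(result, macs)  # 1 3
```

The dense version of this dot product would issue eight MACs; the sparse one issues three and produces the same result.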

Rapid Implementation

With enormous scalability and the Ceva-NeuPro Studio full AI software stack, the NeuPro-M family is the fastest route to a shippable implementation for an edge-AI chip or SoC.

Related Markets

- Consumer IoT

- Automotive

- Infrastructure

- Mobile

- PC

- Cloud inference