Robot navigation systems can be complicated to design: they combine software algorithms, electronics, and mechanical components. A robot must be able to move accurately, and it needs sensors to gather information about its surroundings. The design process must also include thorough testing.

In this article, we’ll introduce some of the key points of robot navigation systems and the sensors they need, using the example of a wheeled robot vacuum cleaner, and focusing on “intelligent walk” navigation. If you’re looking to design a robot, and specifically its sensor and navigation systems, we’ll cover enough of the fundamentals to point you in the right direction, if you’ll pardon the pun.

Types of navigation systems

For our robot vacuum, there are three main classes of navigation systems:

- Random walk. Using proximity sensors and wall-bump sensors, our robot will bounce around its environment in a mostly random fashion. It will cover a given area eventually but must stop cleaning with enough energy left to find its way back to the charger or risk getting stranded with a dead battery. Inexpensive but inefficient.

- Intelligent walk. These robots aim to improve the user experience and extend battery life by making smart use of some combination of wheel encoders, inertial measurement units (IMUs), and optical flow sensors. These sensors enable the robots to clean in a smarter pattern and find their way back to their charger more efficiently.

- Simultaneous localization and mapping (SLAM). SLAM robots add a LiDAR (laser-based) sensor or a wide-angle camera to map their environment and navigate in complex areas. These sensors add to the hardware cost and also require a more powerful processor to handle the data. These robots may still fall back on intelligent-walk strategies in dark or featureless areas.

Proximity and wall-bump sensors for the random walk are fairly straightforward in their purpose, but what about the sensors for the intelligent walk? Let’s look at these in a little more detail and see what sensors they need to determine position. SLAM is beyond the scope of this article, but the basic principle of combining data from multiple types of sensors still applies.

Sensors for dead reckoning

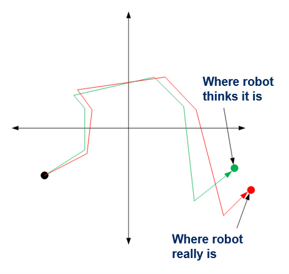

The key to the intelligent walk is “dead reckoning”: estimating the robot’s location relative to a starting position by integrating measurements of speed and direction over time.
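In its simplest form, dead reckoning advances a position estimate one time step at a time from the measured speed and rate of turn. Here is a minimal Python sketch, assuming the robot reports forward speed (m/s) and yaw rate (rad/s) at a fixed sample interval. The `Pose` and `dead_reckon` names are hypothetical and not taken from any particular library.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float = 0.0        # meters from the starting position
    y: float = 0.0        # meters from the starting position
    heading: float = 0.0  # radians; 0 = initial direction of travel


def dead_reckon(pose: Pose, speed: float, yaw_rate: float, dt: float) -> Pose:
    """Advance the pose estimate by one time step of length dt (seconds).

    speed is in m/s and yaw_rate in rad/s. The displacement is applied along the
    heading at the middle of the step, which is slightly more accurate than the
    start-of-step heading when the robot is turning.
    """
    mid_heading = pose.heading + 0.5 * yaw_rate * dt
    return Pose(
        x=pose.x + speed * dt * math.cos(mid_heading),
        y=pose.y + speed * dt * math.sin(mid_heading),
        heading=pose.heading + yaw_rate * dt,
    )


# Example: drive straight at 0.3 m/s for 10 s, sampled at 100 Hz.
pose = Pose()
for _ in range(1000):
    pose = dead_reckon(pose, speed=0.3, yaw_rate=0.0, dt=0.01)
print(pose)  # approximately x=3.0, y=0.0, heading=0.0
```

Note that nothing in this loop ever corrects the estimate against the outside world: every step adds its own small error to the running total.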

Inevitably, the estimated position diverges from the true position as small measurement and estimation errors accumulate. Because dead reckoning has no absolute reference points to correct against, minimizing the rate at which this position error grows is important.
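To make the growth concrete, the short Python sketch that follows computes how far off course a robot ends up when its heading estimate is wrong by a constant angle. The 1° error is a hypothetical figure chosen for illustration, not a measured value. Since sin(1°) is about 0.0175, the robot drifts roughly 1.7 cm sideways per meter traveled, or about 17 cm after 10 m.

```python
import math


def lateral_error(distance_m: float, heading_error_deg: float) -> float:
    """Sideways (cross-track) error after driving distance_m in a straight line
    while the estimated heading is off by a constant heading_error_deg."""
    return distance_m * math.sin(math.radians(heading_error_deg))


for d in (1.0, 5.0, 10.0, 20.0):
    print(f"{d:4.0f} m traveled: {100 * lateral_error(d, 1.0):.1f} cm off course")
```

A constant heading error gives position error that grows linearly with distance. If the heading error itself grows over time, as it does when a gyroscope drifts, the position error grows faster than linearly.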

Dead-reckoning error

Let’s review the sensors involved and find out where these errors come from.

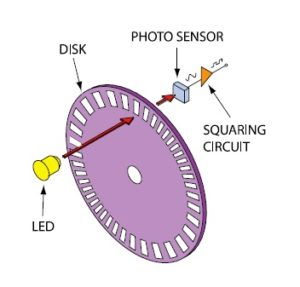

The first sensors to consider are wheel encoders, which use optical or magnetic mechanisms to measure the rotation of the robot’s wheels (forward and reverse). An encoder registers a “tick” for each fixed fraction of a wheel rotation. Because the wheel’s circumference is known, each tick corresponds to a known distance traveled along the floor. The finer the tick resolution, the more precisely you can measure the distance the wheel has traveled.
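The tick-to-distance conversion is simple arithmetic. The following minimal Python sketch extends it to the standard odometry update for a two-wheeled differential-drive robot. The drive layout is an assumption, because the article doesn’t specify one. The 360 ticks per revolution, 70 mm wheel diameter, and 230 mm wheel base are hypothetical values, and `EncoderConfig` and `odometry_step` are illustrative names.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class EncoderConfig:
    ticks_per_rev: int       # encoder ticks per full wheel revolution
    wheel_diameter_m: float  # wheel diameter in meters
    wheel_base_m: float      # distance between the two drive wheels, in meters

    @property
    def meters_per_tick(self) -> float:
        # One full revolution moves the robot one wheel circumference along the floor.
        return math.pi * self.wheel_diameter_m / self.ticks_per_rev


def odometry_step(x: float, y: float, heading: float,
                  d_ticks_left: int, d_ticks_right: int,
                  cfg: EncoderConfig) -> Tuple[float, float, float]:
    """Update a differential-drive pose from the ticks counted since the last update.

    Tick counts are signed: negative when a wheel turns in reverse.
    """
    d_left = d_ticks_left * cfg.meters_per_tick
    d_right = d_ticks_right * cfg.meters_per_tick
    d_center = 0.5 * (d_left + d_right)                # distance moved by the robot's center
    d_heading = (d_right - d_left) / cfg.wheel_base_m  # change in heading, radians
    mid = heading + 0.5 * d_heading                    # heading halfway through the step
    return (x + d_center * math.cos(mid),
            y + d_center * math.sin(mid),
            heading + d_heading)


cfg = EncoderConfig(ticks_per_rev=360, wheel_diameter_m=0.070, wheel_base_m=0.230)
print(f"{cfg.meters_per_tick * 1000:.2f} mm per tick")
print(odometry_step(0.0, 0.0, 0.0, d_ticks_left=360, d_ticks_right=360, cfg=cfg))
```

With these numbers each tick represents about 0.61 mm of travel. The difference between the two wheels’ tick counts is what gives the encoders their estimate of turning, which is why a single slipping wheel corrupts the heading as well as the distance.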

Wheel encoder components

Wheel encoders inevitably lose track of the true distance when the wheels slip or skid on different surfaces. To improve dead-reckoning accuracy, we can combine the wheel encoders with an IMU. An IMU comprises an accelerometer and a gyroscope, and sometimes also a magnetometer.

Accelerometers measure linear acceleration and, when the robot is stationary, the direction of gravity, which provides a long-term reference for tilt (pitch and roll). Gyroscopes measure angular velocity, which can be integrated to track changes in orientation over the short term. Magnetometers measure the surrounding magnetic field and use it to provide a long-term heading reference.
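A common way to exploit this split between short-term and long-term sensors is a complementary filter. The following is a minimal Python sketch for heading (yaw) only. It assumes the magnetometer already outputs a calibrated, undisturbed heading in radians and that the gyroscope reports yaw rate in rad/s. The value `alpha=0.98` and the 0.01 rad/s gyro bias are illustrative assumptions, not recommendations.

```python
import math


def wrap_angle(a: float) -> float:
    """Wrap an angle in radians to the range [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def complementary_heading(heading: float, gyro_z: float, mag_heading: float,
                          dt: float, alpha: float = 0.98) -> float:
    """One update of a complementary filter for heading (yaw), in radians.

    gyro_z: yaw rate from the gyroscope (rad/s), trusted over the short term.
    mag_heading: absolute heading from the magnetometer (rad), trusted over the long term.
    alpha: weight on the gyro prediction; values closer to 1 trust the gyro for longer.
    """
    predicted = heading + gyro_z * dt                 # integrate the gyro
    correction = wrap_angle(mag_heading - predicted)  # disagreement with the magnetometer
    return wrap_angle(predicted + (1.0 - alpha) * correction)


# A stationary robot whose gyro has a 0.01 rad/s bias, sampled at 100 Hz for 60 s.
fused, gyro_only = 0.0, 0.0
for _ in range(6000):
    fused = complementary_heading(fused, gyro_z=0.01, mag_heading=0.0, dt=0.01)
    gyro_only += 0.01 * 0.01
print(f"gyro only: {gyro_only:.3f} rad, fused: {fused:.4f} rad")
```

Integrating the biased gyro alone drifts by about 0.6 rad in a minute. The fused estimate instead settles at a small constant offset of alpha × bias × dt / (1 − alpha), about 0.005 rad here, because the magnetometer keeps pulling it back.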

Combining the IMU with the wheel encoders goes a long way toward improving accuracy, but errors such as sensor drift still accumulate over time. We can improve accuracy further by adding another component: an optical flow sensor.

This tracks the movement of the floor below the robot to provide a 2D estimate of velocity. It’s the same technology as used in a computer mouse, but on a larger scale: It illuminates the floor, detects tiny features on the surface, and measures their movement between frames.

Because it tracks the floor directly, the optical flow sensor is immune to errors caused by wheel slippage. However, its accuracy depends on knowing the distance between the sensor and the floor. It also struggles on very smooth or dark floors, which don’t have enough features for reliable tracking.
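The dependence on floor distance follows from simple geometry. The following Python sketch converts one optical-flow reading into floor velocity under a pinhole-camera assumption. The focal length in pixels, the 20 mm mounting height, and the `quality` score with its threshold of 30 are hypothetical. Real sensors report their own quality metrics, and this sketch also ignores sign conventions and flow caused by the robot rotating.

```python
from typing import Optional, Tuple


def flow_to_velocity(dx_px: float, dy_px: float, height_m: float, focal_px: float,
                     dt: float, quality: int,
                     min_quality: int = 30) -> Optional[Tuple[float, float]]:
    """Convert one optical-flow reading into floor velocity (m/s) in the sensor frame.

    Pinhole-camera model: one pixel of image motion corresponds to
    height_m / focal_px meters of floor motion, so any error in height_m
    scales the velocity by the same proportion.
    quality is a hypothetical surface-quality score; readings below
    min_quality (e.g. on very dark or very smooth floors) are rejected.
    """
    if quality < min_quality:
        return None  # not enough surface features for reliable tracking
    meters_per_px = height_m / focal_px
    return dx_px * meters_per_px / dt, dy_px * meters_per_px / dt


print(flow_to_velocity(10, 0, height_m=0.020, focal_px=40.0, dt=0.01, quality=100))
print(flow_to_velocity(10, 0, height_m=0.022, focal_px=40.0, dt=0.01, quality=100))
```

The same 10-pixel motion reads as about 0.50 m/s at the assumed 20 mm height and about 0.55 m/s at 22 mm. In other words, a 10% error in the assumed height becomes a 10% error in measured speed.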

Sensor fusion: bringing it together

Once we have multiple sensors, their data must be combined intelligently to estimate position, orientation, and speed as accurately as possible. This process is called “sensor fusion.” Because the data streams come from different kinds of sensors, fusion can also calibrate the measurements by comparing what each sensor tells us.
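Both ideas can be illustrated at their simplest. The following Python sketch shows inverse-variance weighting, the scalar special case of a Kalman filter measurement update, combining an encoder speed with an optical-flow speed. It also shows a least-squares estimate of an encoder scale factor obtained by comparing distances against optical flow. It assumes independent, unbiased speed estimates with known variances and trustworthy optical-flow distances on the calibration surface. All numbers are made up, and this is not how any particular commercial product works.

```python
from typing import Sequence, Tuple


def fuse_speeds(v_enc: float, var_enc: float,
                v_flow: float, var_flow: float) -> Tuple[float, float]:
    """Inverse-variance weighted average of two independent speed estimates.

    The less noisy sensor gets more weight, and the fused variance is smaller
    than either input variance. Returns (fused_speed, fused_variance).
    """
    w_enc, w_flow = 1.0 / var_enc, 1.0 / var_flow
    fused = (w_enc * v_enc + w_flow * v_flow) / (w_enc + w_flow)
    return fused, 1.0 / (w_enc + w_flow)


def encoder_scale_from_flow(enc_dist: Sequence[float],
                            flow_dist: Sequence[float]) -> float:
    """Least-squares scale factor k minimizing sum((flow - k * enc) ** 2).

    A k below 1 suggests the encoders over-report distance on this surface,
    for example because of wheel slip.
    """
    num = sum(e * f for e, f in zip(enc_dist, flow_dist))
    den = sum(e * e for e in enc_dist)
    return num / den


print(fuse_speeds(0.30, 0.01 ** 2, 0.32, 0.02 ** 2))
print(encoder_scale_from_flow([1.0, 2.0, 3.0], [0.95, 1.9, 2.85]))
```

With the encoder four times less noisy than the optical flow here, the fused speed is about 0.304 m/s, much closer to the encoder reading. The scale estimate of 0.95 would then be applied to future encoder distances on that surface.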

We also need to consider the real-world environment our robot will operate in. Different floor surfaces can make a big difference to optical flow sensors and wheel encoders, for example, and changes in temperature can introduce inaccuracies to IMU measurements.
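One generic way to handle temperature effects is to model sensor bias as a function of temperature and subtract it. The following Python sketch fits a straight line to gyro bias against temperature, then compensates a raw reading. It assumes bias samples are recorded while the robot is known to be stationary (for example, while docked on its charger) and that bias varies linearly with temperature. Both are assumptions, since real sensors may need higher-order models. The sample data and function names are hypothetical.

```python
from typing import Sequence, Tuple


def fit_bias_vs_temperature(temps_c: Sequence[float],
                            biases: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of bias = intercept + slope * temperature.

    temps_c and biases are paired samples of the gyro output recorded while
    the robot is stationary, so any nonzero reading is treated as bias.
    """
    n = len(temps_c)
    mean_t = sum(temps_c) / n
    mean_b = sum(biases) / n
    sxx = sum((t - mean_t) ** 2 for t in temps_c)
    sxy = sum((t - mean_t) * (b - mean_b) for t, b in zip(temps_c, biases))
    slope = sxy / sxx
    return mean_b - slope * mean_t, slope


def compensate(gyro_z: float, temp_c: float, intercept: float, slope: float) -> float:
    """Subtract the temperature-predicted bias from a raw gyro reading."""
    return gyro_z - (intercept + slope * temp_c)


# Hypothetical stationary samples: bias rises by 0.0001 rad/s per degree C.
temps = [10.0, 20.0, 30.0, 40.0]
bias = [0.003, 0.004, 0.005, 0.006]
intercept, slope = fit_bias_vs_temperature(temps, bias)
print(intercept, slope)                            # approximately 0.002 and 0.0001
print(compensate(0.5045, 25.0, intercept, slope))  # approximately 0.5
```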

Bringing this data together requires suitable software to combine and compare data and to analyze discrepancies and errors. This may sound complex and time-consuming, and in practice, no product designer is going to want to go back to first principles to get usable data.

Instead, pre-integrated solutions, including hardware and software, can enable huge time savings. CEVA has spent nearly 20 years studying and characterizing sensors to produce feature-packed sensor-fusion systems. The company’s dynamic calibration algorithms deal with errors such as zero-g offset, which is a measure of accelerometer bias, in real time and over varying temperatures.

CEVA has developed a robotic dead-reckoning product called MotionEngine Scout, which intelligently fuses data from wheel encoders, optical flow sensors, and IMUs. Scout also uses each sensor’s data to cross-calibrate the others. Scout achieves trajectory accuracy 5× to 10× better than optical flow sensors or wheel encoders alone.

To make this improved accuracy easy to use, CEVA’s sensor-fusion software packages hide the complexities of working with multiple sensors behind a simple interface for robot navigation. MotionEngine Scout and CEVA’s other software help reduce time to market and let system designers confidently select and combine the right sensors for their products.

Read Part 2: Specifying and Qualifying Sensors for Robot Navigation

Read Part 3: Testing and Analyzing the Success of Your Robot Navigation System

Read part 4: Dead Reckoning Shows the Way for Robots


Published on EE Web.