Last month, a computer program called AlphaGo defeated Lee Sedol, one of the world’s top professional players, in the ancient game of Go. The significance of this milestone in the progress of artificial intelligence (AI) cannot be overstated. At the time of the match, Lee Sedol was ranked among the top five players in the world and held the highest rank in Go, 9-dan. AI experts had previously thought that this achievement was at least a decade away. The excitement from this accomplishment is still spreading, and the increased demand has even caused a shortage of Go boards.

Other games that computers have mastered, such as checkers and chess, have a much smaller space of possible moves. Programs built for these games typically start from a database of existing games and switch to brute-force search once the possibilities have been narrowed down sufficiently. According to AlphaGo’s developers, Go is a googol (10^100) times more complex than chess. This rules out brute force entirely and calls for a far more sophisticated approach, which I’d like to take a deeper look at.
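
To get a feel for why brute force breaks down, a rough estimate of the game tree helps: with about b legal moves per turn and d moves per game, a full-width search faces on the order of b^d move sequences. The minimal sketch below uses the estimates quoted in the AlphaGo Nature paper (chess: b ≈ 35, d ≈ 80; Go: b ≈ 250, d ≈ 150). This counts move sequences, which is a cruder measure than the one behind the “googol” comparison, so the two numbers should not be read as interchangeable.

```python
import math

# Rough branching factor b (legal moves per turn) and game length d (moves per game),
# as estimated in the AlphaGo Nature paper.
ESTIMATES = {"chess": (35, 80), "Go": (250, 150)}

def log10_game_tree_size(branching: int, depth: int) -> float:
    """Return log10(b**d) without building the astronomically large integer b**d."""
    return depth * math.log10(branching)

for game, (b, d) in ESTIMATES.items():
    print(f"{game}: roughly 10^{log10_game_tree_size(b, d):.0f} move sequences")
```

Working in logarithms keeps the numbers printable: chess comes out at roughly 10^124 sequences and Go at roughly 10^360, far beyond anything a computer could enumerate.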

Deep Neural Networks and Deep Reinforcement Learning

AlphaGo was developed by DeepMind, a company acquired by Google about two years ago. In this Google Zeitgeist lecture, Demis Hassabis, founder and CEO of DeepMind, points out the difference between “narrow” AI and Artificial General Intelligence (AGI). The G for general is what makes DeepMind’s technology so interesting. DeepMind’s developers insist on making their programs as general as possible, in contrast to conventional AI programs, which are designed to perform one specific task. In the learning phase of every AGI program at DeepMind, the system is given input and a goal, and its deep neural networks work out the best way to achieve that goal. This lets the program emulate, as closely as possible, the way humans interact with the world. Specifically, AlphaGo combines a heuristic search algorithm called Monte Carlo tree search with two deep neural networks working in conjunction: a “policy network” that proposes promising moves and a “value network” that estimates how favorable a board position is.
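
A minimal sketch of how Monte Carlo tree search can lean on two networks: the policy’s prior steers which branches get explored, and the value estimate scores each newly reached position. Everything here is an illustrative assumption rather than AlphaGo’s code. The game is a toy take-away game (remove 1 to 3 stones; taking the last stone wins), and `policy_net` and `value_net` are hypothetical stubs (a uniform prior, and zero for any unfinished position). The selection rule is a PUCT-style formula similar in spirit to the one described in the Nature paper; AlphaGo also blended its value network with fast rollouts, which this sketch omits.

```python
import math
from dataclasses import dataclass, field

# Toy game (an assumption, not Go): remove 1-3 stones per turn; whoever takes the last stone wins.
MOVES = (1, 2, 3)

def legal_moves(pile):
    return [m for m in MOVES if m <= pile]

def policy_net(pile):
    """Hypothetical stand-in for a policy network: uniform prior over legal moves."""
    moves = legal_moves(pile)
    return {m: 1.0 / len(moves) for m in moves}

def value_net(pile):
    """Hypothetical stand-in for a value network, from the perspective of the player to move.
    An empty pile means the previous player took the last stone, so the mover has lost."""
    return -1.0 if pile == 0 else 0.0

@dataclass
class Node:
    prior: float
    visits: int = 0
    value_sum: float = 0.0
    children: dict = field(default_factory=dict)  # move -> Node

    def q(self):
        """Mean value seen so far, for the player to move at this node."""
        return self.value_sum / self.visits if self.visits else 0.0

def select_child(node, c_puct=1.5):
    """PUCT-style rule: mean value (exploitation) plus a prior-weighted exploration bonus."""
    sqrt_total = math.sqrt(node.visits)
    def score(item):
        _, child = item
        # child.q() is from the opponent's perspective, so negate it for this node's mover.
        return -child.q() + c_puct * child.prior * sqrt_total / (1 + child.visits)
    return max(node.children.items(), key=score)

def simulate(node, pile):
    """Descend to a leaf, expand it, and back up the value (negated at each ply)."""
    if pile == 0:
        value = value_net(pile)  # terminal position
    elif not node.children:
        for move, p in policy_net(pile).items():
            node.children[move] = Node(prior=p)
        value = value_net(pile)  # leaf evaluated by the (stub) value network
    else:
        move, child = select_child(node)
        value = -simulate(child, pile - move)
    node.visits += 1
    node.value_sum += value
    return value

def mcts(pile, n_simulations=2000):
    root = Node(prior=1.0)
    for _ in range(n_simulations):
        simulate(root, pile)
    # As in the Nature paper, play the most-visited move at the root.
    return max(root.children.items(), key=lambda kv: kv[1].visits)[0]

print(mcts(5))
```

Choosing the most-visited move rather than the highest mean value is a common design choice in this family of algorithms, since visit counts are less sensitive to a few noisy evaluations.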

The basis for DeepMind’s approach lies in neuroscience, in which Hassabis holds a PhD in addition to his computer science education. Neural networks, the core of the system, are inspired by the way our brains work. Reinforcement learning, which is inspired by behavioral psychology, is how the program learns to perform tasks: it is given a goal and a reward for achieving it. AlphaGo uses it to play against itself, so it improves not only from examples of existing games but also from games that may never have been played before. The many layers of these networks (the “deep” in deep learning) are what make them capable of handling tasks as complex as Go. This type of learning could eventually allow the same system to perform a wide variety of tasks. Maybe the next step is combining it with this program that wrote prize-winning literature (almost)?
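
To make reward-driven self-play concrete, here is a deliberately tiny sketch. It is not AlphaGo’s method: AlphaGo trained deep policy and value networks, whereas this sketch swaps in a lookup table of position values updated by plain Monte Carlo averaging. The game is a hypothetical take-away game (remove 1 to 3 stones; taking the last stone wins), chosen only because it is small enough to learn in seconds. The only feedback the program receives is the reward at the end of each game: +1 for the winner and -1 for the loser.

```python
import random

# Toy game (an assumption, not Go): remove 1-3 stones per turn; whoever takes the last stone wins.
MOVES = (1, 2, 3)

def legal_moves(pile):
    return [m for m in MOVES if m <= pile]

def greedy_move(values, pile):
    """Pick the move that leaves the opponent in the position with the lowest value for them."""
    return min(legal_moves(pile), key=lambda m: values[pile - m])

def self_play_training(start_pile=10, episodes=20000, epsilon=0.1, alpha=0.05, seed=0):
    rng = random.Random(seed)
    # values[p]: estimated outcome (+1 win, -1 loss) for the player about to move with p stones left.
    values = [0.0] * (start_pile + 1)
    values[0] = -1.0  # no stones left: the previous player took the last one, so the mover has lost
    for _ in range(episodes):
        pile, visited = start_pile, []
        while pile > 0:  # both sides are played by the same learner (self-play)
            visited.append(pile)
            if rng.random() < epsilon:
                move = rng.choice(legal_moves(pile))  # explore a random move
            else:
                move = greedy_move(values, pile)      # exploit current estimates
            pile -= move
        # Reward: +1 for the player who took the last stone, -1 for the other player.
        last = len(visited) - 1
        for i, state in enumerate(visited):
            outcome = 1.0 if (last - i) % 2 == 0 else -1.0
            values[state] += alpha * (outcome - values[state])  # move the estimate toward the outcome
    return values

values = self_play_training()
print({pile: greedy_move(values, pile) for pile in range(1, 11)})
```

No strategy is programmed in; with enough games, the greedy policy should learn to leave the opponent facing a multiple of 4 stones whenever the pile is not already a multiple of 4, which is the known winning strategy for this game. The fixed seed makes the run reproducible.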

All the Big Dogs Want AGI

About twenty years ago, AI reached a similar historic milestone when IBM’s Deep Blue defeated Garry Kasparov, then the top-ranked chess player in the world. At the time, many people doubted that computers could beat humans at chess, a game thought to require great creativity and superior strategic thinking, qualities considered uniquely human. The hardware used to accomplish this feat was enormous and expensive. Today, superhuman chess programs are ubiquitous and can run on any smartphone’s processor.

Today IBM continues its pursuit of true artificial intelligence with Watson, a technology platform capable of analyzing unstructured data. But IBM isn’t alone anymore: Facebook, Baidu, Microsoft, Elon Musk and practically everybody who’s anybody is in the race to achieve AGI. It makes sense when you think about it. Once you solve intelligence, you can use it to solve everything else. It’s like the first wish you would make if you met a magic genie: an infinite number of wishes.

Next Stop: Handheld AGI

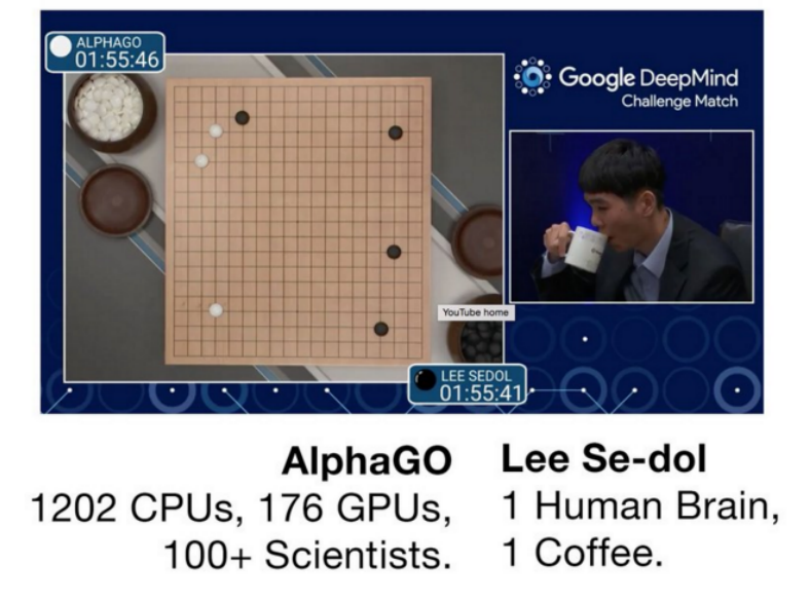

So what processing power is behind all of this? The developers of AlphaGo recently published a paper in Nature explaining in detail how it works. According to the paper, the program was tested in several versions, each with a different amount of processing power, and each version was given an Elo rating according to its playing strength. Naturally, the more processing power, the higher the rating. The largest distributed version, running on multiple machines, used 40 search threads, 1,920 CPUs and 280 GPUs. In all cases, search time was limited to five seconds per move.

According to this analysis, the distributed AlphaGo system draws about 1 megawatt, compared with only 20 watts for the human brain. In other words, AlphaGo consumed about 50,000 times more power than Lee Sedol’s brain. As the algorithms advance toward mimicking the inner workings of the human brain, the hardware running them strives to match the brain’s incredibly low power consumption. In the meantime, this massive computation power is far from feasible on low-power embedded platforms.
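
The 50,000 figure follows directly from the two power numbers quoted above, and because energy is power multiplied by time, the ratio holds for any duration both players spend thinking. As an illustration only, the sketch below assumes that each side spends the five seconds per move used in the paper’s evaluation games (the actual match against Lee Sedol used different time controls).

```python
def energy_joules(power_watts: float, seconds: float) -> float:
    """Energy (J) = power (W) x time (s)."""
    return power_watts * seconds

ALPHAGO_POWER_W = 1_000_000  # ~1 MW for the distributed system (estimate quoted in the text)
BRAIN_POWER_W = 20           # ~20 W for a human brain
MOVE_TIME_S = 5              # assumption: both players think for 5 s per move

alphago_j = energy_joules(ALPHAGO_POWER_W, MOVE_TIME_S)
brain_j = energy_joules(BRAIN_POWER_W, MOVE_TIME_S)
print(f"AlphaGo: {alphago_j / 1e6:.0f} MJ per move, brain: {brain_j:.0f} J per move")
print(f"ratio: {alphago_j / brain_j:,.0f}")
```

This prints 5 MJ versus 100 J per move, a ratio of 50,000, the same as the ratio of the two power figures.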

There is still a lot of work to do before this type of AI can fit in the palm of our hand. Significant progress is being made on two complementary fronts: on one hand, the algorithms are becoming more efficient; on the other, the hardware is becoming more powerful while shrinking in size and power consumption. The challenge ahead is to run these networks on embedded platforms such as our CEVA-XM4 processor and make them available to the mass market.

Is the Future of Humankind in Danger?

Undoubtedly, this technology’s vast potential also carries many dangers. I expressed some of these concerns in a recent post entitled Will our Deep Learning Machines Love Us or Loath Us? Such concerns are probably what motivated the AI community’s support for this open letter, which aims to make sure that we “reap its benefits while avoiding potential pitfalls”. This fear isn’t some far-fetched idea with a few fringe supporters: the letter is signed by Demis Hassabis, Stephen Hawking, Elon Musk, Steve Wozniak and many others, including AI experts at Google, Microsoft and Facebook and researchers from many of the leading universities. Right now, we seem to be pretty far from any program that could threaten humanity. But, as AlphaGo has shown, things are advancing faster than anticipated. Let’s hope that this early awareness of the threats ahead really does help keep our own creations under control.