Some technologies see growing use as new markets emerge and bring new technical requirements. One such technology is the digital signal processor (DSP), available either as a chip or as an IP core ready for system-on-chip (SoC) integration. While DSPs have been around for a long time, newer generations support features that are important for addressing certain markets. One such market is the Internet of Things (IoT). Because of the nature of many IoT devices, they benefit from running a real-time operating system (RTOS). This article looks at how DSPs and the RT-Thread RTOS are used together for IoT applications.

Evolution of DSP Technologies

The purpose of a DSP is to convert and manipulate real-world analog signals, which are continuous by nature. The manipulation is performed through signal processing algorithms. DSPs have been around since the 1980s and have evolved considerably in hardware features, software development tools, and infrastructure. In the early years, algorithms were programmed onto the DSP in assembly language. As the markets for DSPs expanded and the algorithms grew more complex, architectures evolved to facilitate the development of high-level-language compilers.

A chip with an embedded DSP core includes on-chip memory that is often large enough to hold the complete program needed for its dedicated tasks. Modern DSP applications range from audio and speech processing, image processing, and telecom signal processing to sensor data processing and control systems. Then there is the IoT market, which covers a combination of these applications in a multitude of use cases. Industry analyst firm MarketsandMarkets projects that the global IoT technology market will grow to $566.4 billion by 2027.

Why is DSP a Good Match for IoT Devices?

IoT is all about communications and connecting things in the real world, using data gathered through different types of sensors. DSPs are designed for analyzing and processing the continuously varying real-world signals received from those sensors, such as audio, video, temperature, pressure, or humidity. For example, the CEVA-SensPro2 sensor hub DSP family is designed for processing and fusing data from multiple sensors and for neural network inferencing for contextual awareness. DSP tasks involve repetitive numeric computations in real time with precision and accuracy. As IoT market growth drives the deployment of more and more sensors, all the collected data needs to be processed power-efficiently and in real time. There is a big push toward processing data right on the IoT device rather than sending it to the cloud for processing.
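To make "repetitive numeric computations" concrete, here is a minimal sketch of a finite impulse response (FIR) filter, a common signal processing kernel, written in portable C. The function name `fir_q15`, the Q15 fixed-point format, and the zero-padding of samples before the start of the buffer are assumptions chosen for illustration; they are not taken from any CEVA library.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Clamp a wide intermediate value into the 16-bit sample range. */
static int16_t saturate_i16(int64_t v)
{
    if (v > INT16_MAX) return INT16_MAX;
    if (v < INT16_MIN) return INT16_MIN;
    return (int16_t)v;
}

/*
 * Hypothetical helper: y[i] = sum over k of coeff[k] * x[i - k],
 * with coefficients in Q15 fixed point (32768 represents 1.0).
 * Samples before the start of the buffer are treated as zero.
 */
void fir_q15(const int16_t *x, size_t n,
             const int16_t *coeff, size_t taps, int16_t *y)
{
    assert(x != NULL && coeff != NULL && y != NULL && taps > 0);

    for (size_t i = 0; i < n; i++) {
        int64_t acc = 0; /* wide accumulator so the sum cannot overflow */
        for (size_t k = 0; k < taps && k <= i; k++) {
            /* The multiply-accumulate (MAC) at the heart of most DSP loops. */
            acc += (int64_t)coeff[k] * x[i - k];
        }
        /* Scale the Q15 product back to the sample range and saturate. */
        y[i] = saturate_i16(acc / 32768);
    }
}
```

With four taps of 8192 each (0.25 in Q15), the filter acts as a four-sample moving average, the kind of smoothing often applied to noisy sensor readings. The inner loop is one multiply-accumulate per tap per sample, which is the operation DSP hardware is built to execute efficiently.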

Another current trend is the increasing use of artificial intelligence (AI) algorithms for processing data locally on IoT devices. These algorithms are based on neural network models and require a high level of parallelism for efficient execution. Computational parallelism is a key strength of a DSP over a general-purpose central processing unit (CPU), and to meet that requirement, modern DSP architectures use wide vector and single instruction, multiple data (SIMD) capabilities.
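The SIMD idea can be sketched in plain C without any vendor intrinsics. The example below computes an 8-bit integer dot product, a core operation in neural network inferencing, with the loop arranged in independent "lanes" the way a vector unit would process them. The lane count `LANES` and the function name `dot_i8` are hypothetical; a real DSP would use much wider vectors and its own compiler intrinsics, which are not shown here.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define LANES 8 /* hypothetical vector width, for illustration only */

/* Dot product of two int8 vectors, structured as LANES parallel accumulators. */
int32_t dot_i8(const int8_t *a, const int8_t *b, size_t n)
{
    assert(a != NULL && b != NULL);

    int32_t lane_acc[LANES] = {0};
    size_t i = 0;

    /* Main loop: each outer iteration stands in for one vector MAC,
     * where all LANES products are independent of each other. */
    for (; i + LANES <= n; i += LANES) {
        for (size_t l = 0; l < LANES; l++) {
            lane_acc[l] += (int32_t)a[i + l] * b[i + l];
        }
    }

    /* Horizontal reduction: combine the per-lane partial sums. */
    int32_t sum = 0;
    for (size_t l = 0; l < LANES; l++) {
        sum += lane_acc[l];
    }

    /* Scalar tail for lengths that are not a multiple of LANES. */
    for (; i < n; i++) {
        sum += (int32_t)a[i] * b[i];
    }
    return sum;
}
```

Because the per-lane accumulations do not depend on one another, a vectorizing compiler or a hand-written SIMD kernel can issue them as a single instruction, which is where the throughput advantage over a scalar loop comes from.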

In a nutshell, a powerful DSP-based solution can satisfy both the high-performance compute needs and the low-power requirements of modern IoT devices.

Why is DSP a Good Match for RTOS?

Just as a DSP is a specialized processor, an RTOS is a specialized operating system. A DSP is dedicated to processing real-world data extremely fast and reliably. An RTOS is dedicated to reliably meeting specific response-time requirements. Just as a DSP is compact compared to a general-purpose CPU, an RTOS is compact compared to a regular operating system. These characteristics match the requirements of IoT devices, making DSPs and RTOSs a perfect match for IoT applications.

Historically, embedded devices typically had a single dedicated purpose and could most often make do with an 8-bit or 16-bit microcontroller. These devices could manage without an RTOS. But today’s IoT devices are more complex and need a 32-bit combined CPU and DSP with an RTOS to manage control functions and run complex signal processing tasks.

The question to ask is: can a modern DSP handle both the signal processing and the control functions of an IoT device? The answer is yes. A hybrid DSP architecture that offers both DSP-oriented and controller-oriented features is finding rapid adoption in IoT and other embedded devices. This hybrid DSP supports a very long instruction word (VLIW) architecture, single instruction, multiple data (SIMD) operations, single-precision floating point, compact code size, a full RTOS, ultra-fast context switching, dynamic branch prediction, and more. This eliminates the need for an additional processor on the IoT device to run the RTOS.

DSP Oriented RTOS

A DSP-oriented RTOS is designed to leverage the high-performance features of a DSP. It is a preemptive, priority-based, multitasking operating system that offers very low interrupt latency. These RTOSs come with drivers, an application programming interface (API), and runtime chip support libraries (CSL) of DSP functions specific to a DSP chip. All on-chip peripherals, such as the cache, direct memory access (DMA), timers, and the interrupt unit, can be controlled. This enables IoT application developers to easily configure the RTOS to handle resource requests and manage the system.
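As a rough illustration of what "preemptive, priority-based" means, the sketch below keeps a bitmap of ready priority levels and checks whether a newly ready task should preempt the running one. It is a generic technique, not code from RT-Thread or any CEVA port. The names (`sched_t`, `sched_should_preempt`, and so on), the 32 priority levels, and the convention that a lower number means a higher priority are all assumptions made for this example.

```c
#include <assert.h>
#include <stdint.h>

#define NUM_PRIOS 32 /* hypothetical number of priority levels */

/* Bit p is set while at least one task of priority p is ready to run. */
typedef struct {
    uint32_t ready_mask;
} sched_t;

void sched_set_ready(sched_t *s, unsigned prio)
{
    assert(prio < NUM_PRIOS);
    s->ready_mask |= UINT32_C(1) << prio;
}

void sched_clear_ready(sched_t *s, unsigned prio)
{
    assert(prio < NUM_PRIOS);
    s->ready_mask &= ~(UINT32_C(1) << prio);
}

/* Returns the highest ready priority (lowest number), or -1 if none is ready.
 * Real kernels typically replace this loop with a find-first-set
 * instruction or a lookup table to keep the latency constant. */
int sched_highest_ready(const sched_t *s)
{
    for (unsigned p = 0; p < NUM_PRIOS; p++) {
        if (s->ready_mask & (UINT32_C(1) << p)) {
            return (int)p;
        }
    }
    return -1;
}

/* Nonzero when a ready task outranks the one currently running. */
int sched_should_preempt(const sched_t *s, unsigned running_prio)
{
    int top = sched_highest_ready(s);
    return top >= 0 && (unsigned)top < running_prio;
}
```

In this model, an interrupt handler that makes a priority 3 task ready while a priority 10 task is running would cause `sched_should_preempt` to return nonzero, and the kernel would switch context on interrupt exit. Fast context switching in hardware shortens exactly that step.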

RT-Thread, an RTOS for IoT Applications

RT-Thread is an open-source real-time operating system (RTOS). It combines very low resource occupancy, high reliability, and high scalability, and it is optimized for IoT devices. RT-Thread is supported by rich middleware, along with the broad hardware and software ecosystem required for IoT devices. It supports all mainstream compiler toolchains, such as GCC, Keil, and IAR, and a variety of standard interfaces and environments, such as POSIX, CMSIS, a C++ application environment, MicroPython, and JavaScript. RT-Thread also offers strong support for all the mainstream CPU and DSP architectures. Inter-thread communication and synchronization are handled efficiently and consistently through services such as RTOS messaging and semaphores. RT-Thread comes in a standard version for use with resource-rich IoT devices and a Nano version for use with resource-constrained systems. For more details, refer to the project website at https://www.rt-thread.io/. As of December 2021, RT-Thread was reported to be running on 1.5 billion devices. [Source: RT-Thread]
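Since the article lists POSIX among the interfaces RT-Thread supports, semaphore-based synchronization can be sketched with standard POSIX calls. The example below is a minimal host-side sketch in which a producer thread hands samples to a consumer thread through a one-slot channel guarded by two semaphores. The names `channel_t` and `run_channel_demo` are hypothetical, and how this maps onto a particular RT-Thread configuration (or onto RT-Thread's native semaphore API) is not covered here.

```c
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>
#include <stddef.h>

#define NUM_SAMPLES 5

/* One-slot channel shared by the two threads. */
typedef struct {
    sem_t data_ready; /* posted by the producer when 'sample' is valid */
    sem_t slot_free;  /* posted by the consumer when 'sample' was consumed */
    int sample;
    int sum;
} channel_t;

static void *producer(void *arg)
{
    channel_t *ch = arg;
    for (int i = 1; i <= NUM_SAMPLES; i++) {
        sem_wait(&ch->slot_free);  /* wait until the slot may be overwritten */
        ch->sample = i * 10;       /* stand-in for a sensor reading */
        sem_post(&ch->data_ready); /* signal the consumer */
    }
    return NULL;
}

static void *consumer(void *arg)
{
    channel_t *ch = arg;
    for (int i = 0; i < NUM_SAMPLES; i++) {
        sem_wait(&ch->data_ready); /* block until a sample arrives */
        ch->sum += ch->sample;
        sem_post(&ch->slot_free);  /* hand the slot back */
    }
    return NULL;
}

/* Runs both threads to completion and returns the accumulated sum.
 * A sketch only: production code would handle errors instead of asserting. */
int run_channel_demo(void)
{
    channel_t ch = { .sample = 0, .sum = 0 };
    pthread_t prod, cons;
    int rc;

    rc = sem_init(&ch.data_ready, 0, 0); assert(rc == 0);
    rc = sem_init(&ch.slot_free, 0, 1);  assert(rc == 0);
    rc = pthread_create(&cons, NULL, consumer, &ch); assert(rc == 0);
    rc = pthread_create(&prod, NULL, producer, &ch); assert(rc == 0);
    (void)rc;

    pthread_join(prod, NULL);
    pthread_join(cons, NULL);
    assert(ch.sample == NUM_SAMPLES * 10); /* last sample written */

    sem_destroy(&ch.data_ready);
    sem_destroy(&ch.slot_free);
    return ch.sum;
}
```

The two semaphores enforce strict alternation: the producer never overwrites a sample the consumer has not read, and the consumer never reads a stale one. On an RTOS, the same pattern lets a low-priority processing task sleep until a sampling task or interrupt handler signals new data.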

CEVA DSP and RT-Thread RTOS

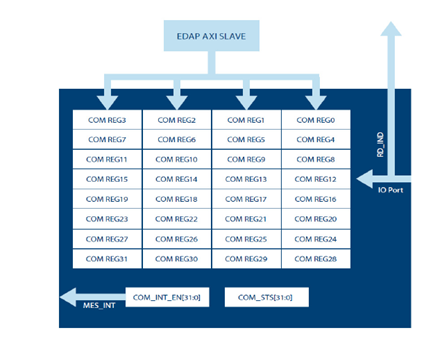

Because CEVA’s DSP architectures are natively designed to support RTOS features and ultra-fast context switches, an IoT device implemented with a CEVA DSP and the RT-Thread RTOS can handle many communication tasks between different resources without interrupting the RTOS. For example, the multi-core communication interface (MCCI) mechanism enables commands and messages to be exchanged between the cores. Communication between the cores is achieved by using the AXI slave ports to directly access dedicated command registers. The DSP has dedicated controls and indications for tracking the status of the communication via the MCCI.

Multi-core Communication Interfaces

Messaging between the cores is performed using MCCI_NUM dedicated command registers of 32 bits each. The 32-bit COM_REGx registers are written by the external core via an AXI slave port and are read-only for the receiving core. The command initiator core can write a maximum of four registers simultaneously (128-bit AXI bus) or eight registers simultaneously (256-bit AXI bus).

When the core initiating the command writes the command to COM_REGx, the addressed registers are updated and the relevant status bits in the COM_STS registers are updated as well. In addition, an interrupt (MES_INT) is asserted to notify the receiving core.

After the receiving core reads one of the COM_REGx registers, a read indication is sent to the initiator. The read indication is sent by the receiving core using a dedicated RD_IND (Read Indication) bus interface that is MCCI_NUM bits wide. Each bit of the RD_IND bus denotes a read operation from the corresponding COM_REGx register. Using the IO interface, the receiving core can read only one COM_REGx register at a time. This mechanism makes it easier to synchronize not only between different cores but also between different tasks running on those cores.
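The handshake described above can be modeled in software to make the sequence easier to follow. The sketch below is a host-side simulation only, not a driver: the struct layout, the function names, the value chosen for `MCCI_NUM`, and the assumption that a read clears the matching COM_STS bit and that MES_INT drops once no commands are pending are all hypothetical and are not taken from CEVA documentation. The only rule taken directly from the text is that one write can cover the bus width divided by 32 registers (four on a 128-bit bus, eight on a 256-bit bus).

```c
#include <assert.h>
#include <stdint.h>

#define MCCI_NUM 8 /* hypothetical register count, for illustration */

/* Software model of the MCCI state; not a real register map. */
typedef struct {
    uint32_t com_reg[MCCI_NUM]; /* COM_REGx: written by the initiator */
    uint32_t com_sts;           /* COM_STS: bit x set while COM_REGx is unread */
    uint32_t rd_ind;            /* RD_IND: bit x set when COM_REGx was read */
    int mes_int;                /* MES_INT: nonzero while asserted */
} mcci_model_t;

/* 32-bit registers that fit in one AXI write: 4 at 128 bits, 8 at 256 bits. */
unsigned mcci_max_regs_per_write(unsigned axi_bus_bits)
{
    return axi_bus_bits / 32u;
}

/* Initiator side: write 'count' commands starting at COM_REG<first>.
 * Returns 0 on success, -1 if the request does not fit the bus or the bank. */
int mcci_initiator_write(mcci_model_t *m, unsigned first,
                         const uint32_t *cmds, unsigned count,
                         unsigned axi_bus_bits)
{
    if (count == 0 || count > mcci_max_regs_per_write(axi_bus_bits) ||
        first >= MCCI_NUM || count > MCCI_NUM - first) {
        return -1;
    }
    for (unsigned i = 0; i < count; i++) {
        m->com_reg[first + i] = cmds[i];
        m->com_sts |= UINT32_C(1) << (first + i); /* mark as pending */
    }
    m->mes_int = 1; /* notify the receiving core */
    return 0;
}

/* Receiver side: read one register and raise the matching RD_IND bit. */
uint32_t mcci_receiver_read(mcci_model_t *m, unsigned idx)
{
    assert(idx < MCCI_NUM);
    m->com_sts &= ~(UINT32_C(1) << idx); /* assumed: a read clears pending */
    m->rd_ind |= UINT32_C(1) << idx;     /* read indication to the initiator */
    if (m->com_sts == 0) {
        m->mes_int = 0;                  /* assumed: no pending commands left */
    }
    return m->com_reg[idx];
}
```

In this model, the initiator can poll `rd_ind` to learn which commands have been consumed before reusing a register, which mirrors how the read indication supports synchronization between cores.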

CEVA has ported many different RTOSs to its DSP product offerings. The most recent addition is support for both the standard version and the Nano version of the RT-Thread RTOS. RT-Thread has been ported to multiple CEVA DSPs, such as SensPro2, CEVA-BX1, CEVA-BX2, CEVA-XC16, and others. For more details about these CEVA IPs, refer to www.ceva-ip.com.

Published on Embedded.